This site lists some of my main projects of the past years, most of which (but not all) revolve around wearable multimodal biosignal instrumentation for neuroimaging and biosignal processing using machine learning. If you want to learn more about a specific project, please see the resources provided underneath each short description or contact me. Most importantly, visit the IBS-Lab website for my academic work of recent years, as this website is updated only sporadically.

2022+: Intelligent Biomedical Sensing Lab at BIFOLD – TU Berlin

In Nov. 2022 I became head of the independent research group “Intelligent Biomedical Sensing” at BIFOLD, TU Berlin. The IBS-Lab focuses on wearable sensing and ML-boosted methods for neurotechnology and physiology measurements in naturalistic environments (“Neuroscience in the Everyday World”). Here you can find the Lab’s mission statement (and much more):

And here you can find the Lab’s projects and publications.

2020+: Developing Next-Gen Neuroimagers @NIRx

As Director of Research and Development at NIRx from 2020 to 2022, I was responsible for the development of next-generation neuroimaging instruments. Below are some of the products that we developed with NIRx’ amazing team of developers and scientific consultants. Please check out NIRx’ website for more.

2020 – NIRxBorealis:

This device enables the use of NIRx’ mobile flagship brain imager, the NIRSport2, in an (f)MRI environment by providing a laser/APD-based interface to optical fibers.

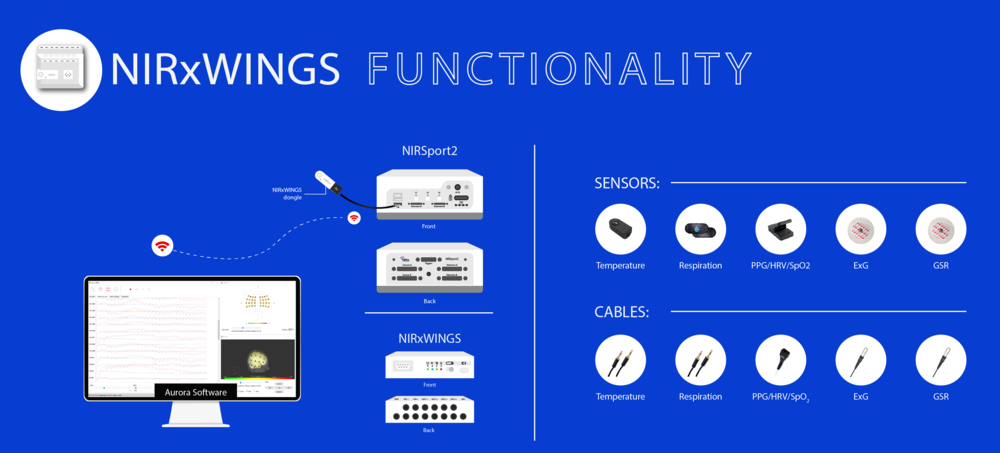

2021 – NIRxWINGS:

The WINGS is a multimodal extension to the NIRSport2 that enables fully integrated and synchronized recording of physiological signals (electrophysiology (ExG), PPG, StO2, respiration, temperature, …).

Resources

- NIRx website

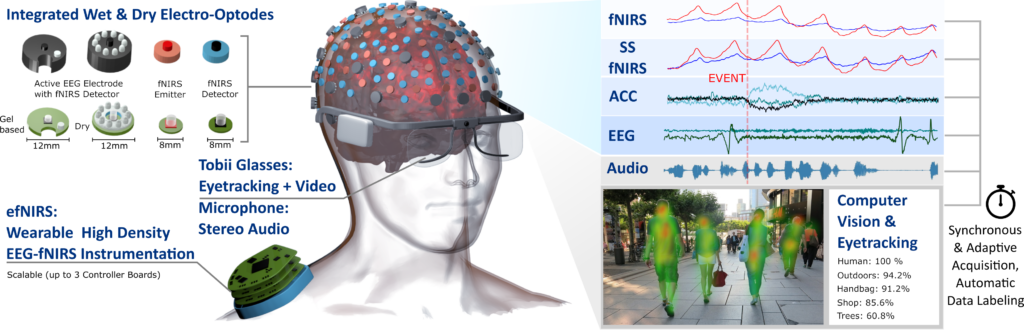

2018-20: Neuroimaging in the Everyday World (NEW) – concept and grant development

Neuroimaging in the everyday world requires interdisciplinary innovation: from novel miniaturized multimodal hardware, to improved signal processing, denoising, and modelling, to automatic labeling and context generation. The NEW concept proposes to combine hybrid wearable high-density EEG-fNIRS instrumentation with machine-learning-based signal denoising, and with stimulus detection and data segmentation using AI-based computer vision and eye tracking. This concept was developed together with David Boas and is strongly based on my previous PhD thesis work. It resulted in a successful NIH U01 grant application in 2020.

Resources:
- Talk and Technical Abstract at OSA Biophotonics 2020
- Concept in PhD Thesis at Berlin Institute of Technology (TUB)
- M3BA concept in IEEE TBME 2016 publication
- Technology Networks article 2020 on "merging with machines"

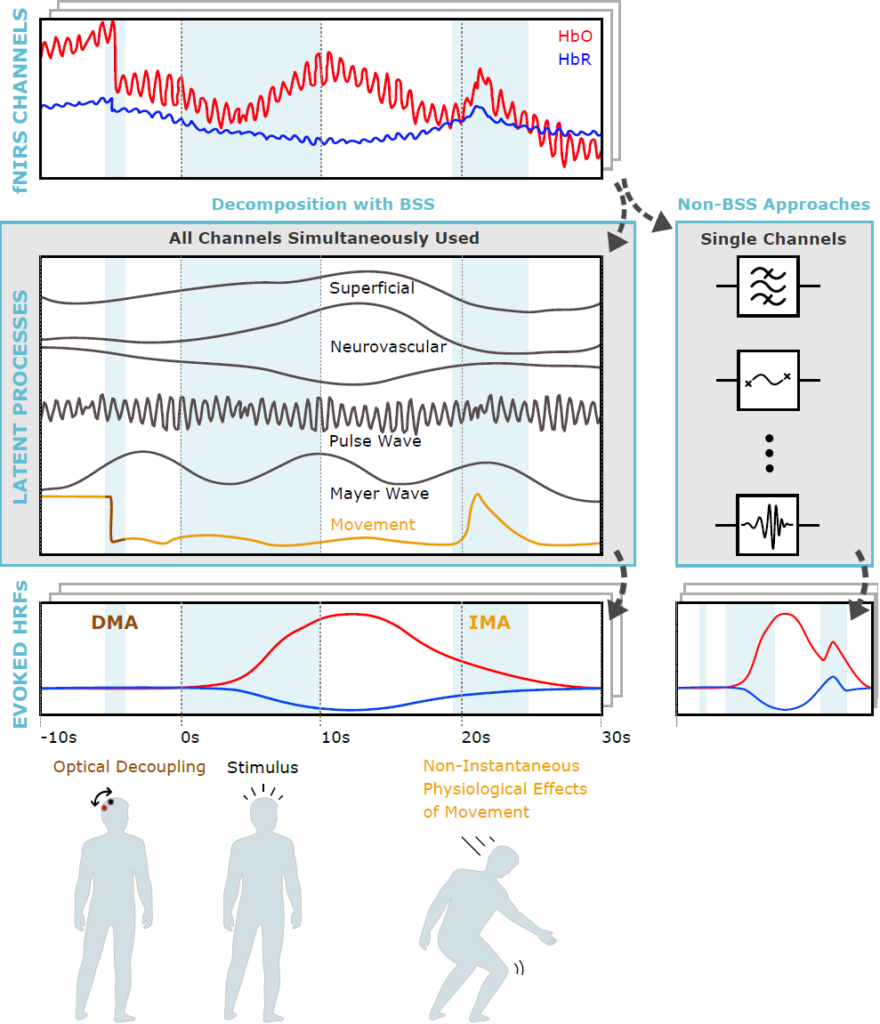

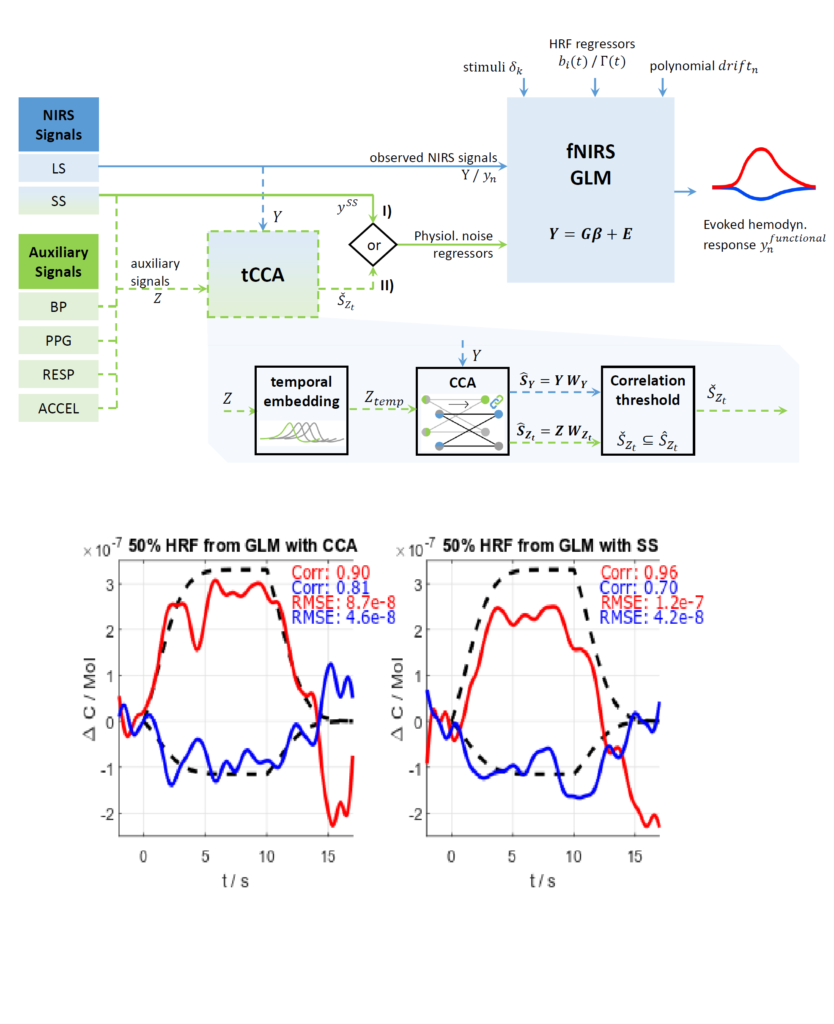

2018-20+: Improving decomposition, modeling and denoising of DOT/fNIRS signals using multimodal Machine Learning

Functional Near-Infrared Spectroscopy (fNIRS) signals contain a complicated mix of contributions from neurovascular coupling and various sources of systemic physiology. Slow vascular oscillations, respiration, and also the effects of movement on blood pressure and perfusion can make it very challenging to robustly estimate brain signals from raw fNIRS data. In my work, I tackle this challenge in two ways: (1) by using unsupervised (blind source separation) machine learning methods, such as Principal Component Analysis (PCA), Independent Component Analysis (ICA) and temporally embedded Canonical Correlation Analysis (tCCA); (2) by combining best-practice supervised approaches, such as the General Linear Model (GLM), with state-of-the-art unsupervised machine learning and multimodal signals using tCCA.
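To illustrate the GLM idea in (2), here is a minimal sketch, not the published pipeline: a design matrix with one HRF-convolved task regressor, a constant, and one nuisance column standing in for a systemic-physiology regressor. In the tCCA-based approach such nuisance regressors would be derived from auxiliary signals; here the column is simply a synthetic sinusoid. The double-gamma HRF parameters, the function names (`canonical_hrf`, `design_matrix`, `fit_glm`), and all data are assumptions for illustration.

```python
import numpy as np
from math import gamma

def canonical_hrf(fs, duration=30.0):
    """Double-gamma HRF sampled at fs Hz (SPM-like shape parameters, assumed)."""
    t = np.arange(0, duration, 1.0 / fs)
    peak = t**5 * np.exp(-t) / gamma(6)          # gamma pdf, shape 6
    undershoot = t**15 * np.exp(-t) / gamma(16)  # gamma pdf, shape 16
    h = peak - undershoot / 6.0
    return h / h.sum()                            # normalize to unit sum

def design_matrix(onsets_s, block_len_s, n_samples, fs, nuisance=None):
    """Columns: HRF-convolved boxcar, constant, optional nuisance regressors."""
    boxcar = np.zeros(n_samples)
    for onset in onsets_s:
        i0 = int(round(onset * fs))
        i1 = min(n_samples, i0 + int(round(block_len_s * fs)))
        boxcar[i0:i1] = 1.0
    task = np.convolve(boxcar, canonical_hrf(fs))[:n_samples]
    cols = [task, np.ones(n_samples)]
    if nuisance is not None:
        cols.extend(np.atleast_2d(nuisance))      # each row becomes one column
    return np.column_stack(cols)

def fit_glm(y, X):
    """Ordinary least-squares estimate of the GLM weights."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

# Synthetic usage example: 300 s of one channel sampled at 10 Hz.
rng = np.random.default_rng(0)
fs, n = 10.0, 3000
t = np.arange(n) / fs
resp = 0.1 * np.sin(2 * np.pi * 0.25 * t)        # hypothetical respiration-like regressor
X = design_matrix([20, 80, 140, 200], 15, n, fs, nuisance=resp)
true_beta = np.array([2.0, 0.5, 3.0])             # task, offset, nuisance weights
y = X @ true_beta + 0.01 * rng.standard_normal(n)
beta = fit_glm(y, X)
print("estimated weights:", np.round(beta, 3))
```

Adding nuisance regressors as extra columns lets the task weight be estimated jointly with, rather than after, the removal of systemic components.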

Resources:
- NeuroImage 2020 publication on improving the performance of the General Linear Model for fNIRS by using multimodal tCCA regressors
- NeuroImage 2019 publication on fNIRS blind source separation using PCA, ICA, tCCA and multimodality
- Signal processing chapter in PhD Thesis at Berlin Institute of Technology (TUB)

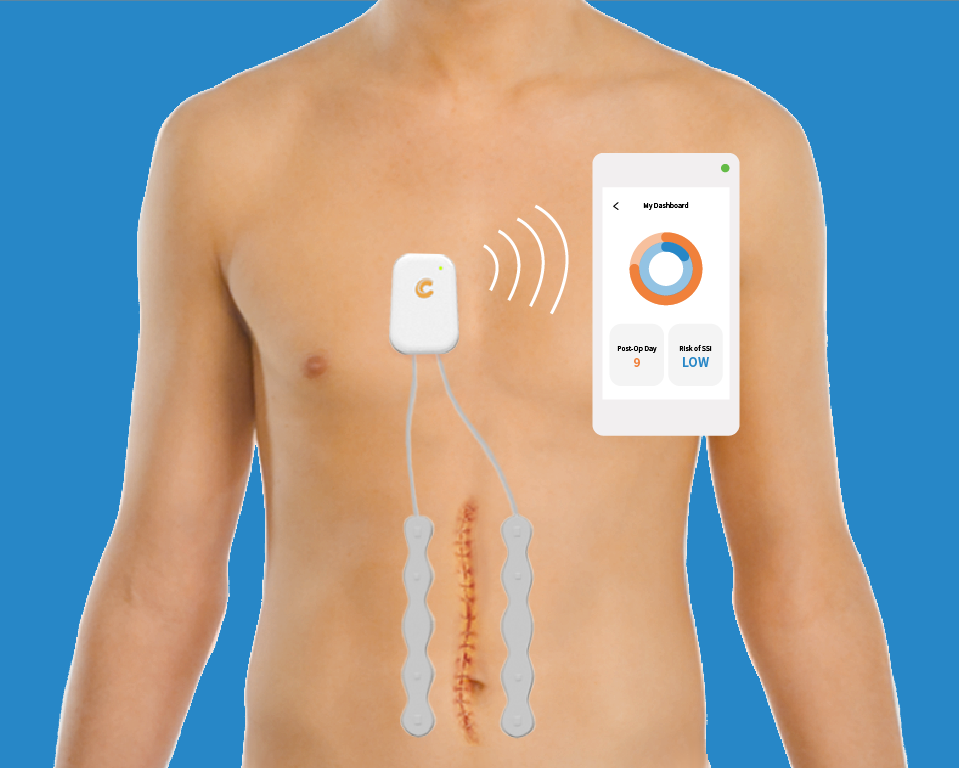

2018-20+: Wearable smart dressing for AI-based prediction of Surgical Site Infections @Crely Healthcare Singapore

Crely Healthcare Pte. Ltd (Singapore & USA) is a multinational healthcare startup that aims to provide a Software as a Service solution to predict and prevent Surgical Site Infections by combining multimodal smart wearable sensing and Artificial Intelligence. As Crely’s CTO from 2018 to 2020, I helped move this mission forward through concept and prototype development and by consulting on system architecture, animal study design and validation. Arun Sethuraman, Crely’s CEO, is a Harvard Business School alumnus who previously held leading global roles at Stryker and Merck. It has been a great pleasure and learning experience to work with him on this project!

Resources:
- Crely website

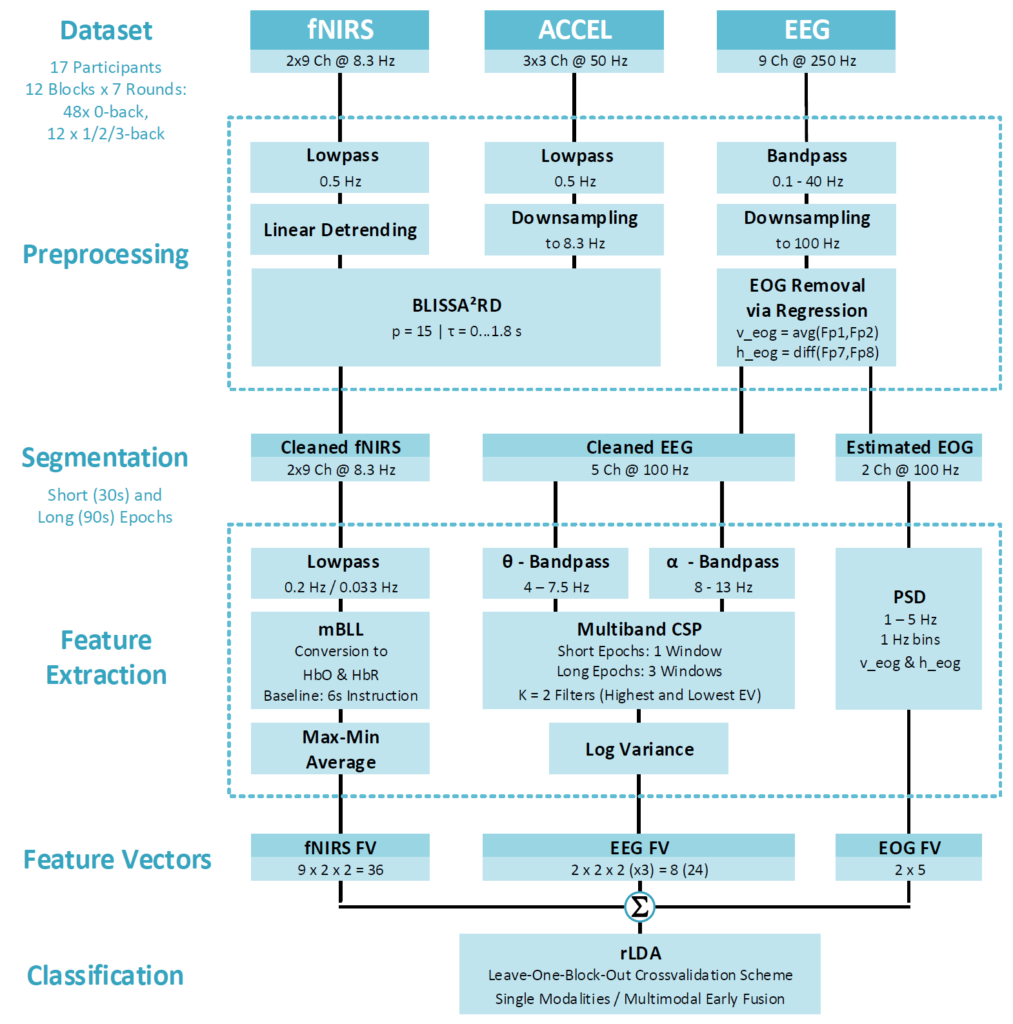

2014-20+: Machine Learning for single trial analysis of EEG and fNIRS brain signals and Brain Computer Interfaces | Multimodal Datasets

To analyze and classify brain and body signals, machine learning is currently the way to go. Supervised approaches use well-defined experimental data from datasets to learn statistical properties of the data that can then be used for inference and prediction on new, unseen data. Over the last decade, my work in this domain has comprised the planning and execution of multimodal Electroencephalography (EEG) and functional Near-Infrared Spectroscopy (fNIRS) studies, the acquisition of datasets, and the development of offline and online pipelines for the classification and prediction of brain data using supervised linear methods, such as regularized Linear Discriminant Analysis (rLDA), Common Spatial Pattern (CSP) analysis, and more. The processing pipelines include the online and offline implementation of preprocessing/filtering, segmentation, synchronization and feature extraction steps, as well as the classification and cross-validation itself.
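The classification and cross-validation steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the pipeline used in the listed publications: a two-class shrinkage LDA whose pooled covariance is shrunk toward a scaled identity with a fixed, hypothetical parameter `gamma` (in practice it is often chosen analytically, e.g. Ledoit-Wolf), evaluated with plain k-fold cross-validation. The features are synthetic Gaussian vectors standing in for, e.g., mean HbO amplitudes or log-variance of CSP-filtered EEG; CSP itself is not shown. All function names are hypothetical.

```python
import numpy as np

def train_shrinkage_lda(X, y, gamma=0.1):
    """Two-class LDA with covariance shrinkage toward nu * I (fixed gamma, assumed)."""
    X0, X1 = X[y == 0], X[y == 1]
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    # Pooled within-class covariance
    Xc = np.vstack([X0 - mu0, X1 - mu1])
    C = Xc.T @ Xc / (len(Xc) - 2)
    d = C.shape[0]
    nu = np.trace(C) / d                          # average eigenvalue
    C_shrunk = (1 - gamma) * C + gamma * nu * np.eye(d)
    w = np.linalg.solve(C_shrunk, mu1 - mu0)      # projection vector
    b = -w @ (mu0 + mu1) / 2                      # threshold halfway between class means
    return w, b

def predict(X, w, b):
    """Assign class 1 where the projected score is positive."""
    return (X @ w + b > 0).astype(int)

def cross_validate(X, y, k=5, gamma=0.1, seed=0):
    """Mean accuracy over k randomly permuted folds."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), k)
    accs = []
    for i in range(k):
        test = folds[i]
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        w, b = train_shrinkage_lda(X[train], y[train], gamma)
        accs.append(np.mean(predict(X[test], w, b) == y[test]))
    return float(np.mean(accs))

# Synthetic usage example: 200 trials, 20 features, class means shifted by 1.0.
rng = np.random.default_rng(1)
y = np.repeat([0, 1], 100)
X = rng.standard_normal((200, 20))
X[y == 1] += 1.0
acc = cross_validate(X, y, k=5, gamma=0.1)
print(f"5-fold CV accuracy: {acc:.2f}")
```

Training the classifier only on the training folds, and scoring it only on the held-out fold, is what keeps the accuracy estimate honest; any data-driven preprocessing (such as fitting CSP filters) would also have to move inside that loop.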

Resources:
- Frontiers 2020 publication on the use of the GLM for fNIRS in machine learning and cross-validation
- Classification of workload in freely moving operators in PhD Thesis at Berlin Institute of Technology (TU Berlin)
- IEEE TNSRE 2016 publication of hybrid open-access EEG-fNIRS dataset for BCI
- Nature Scientific Data 2018 publication of hybrid open-access EEG-fNIRS dataset for BCI
- Frontiers 2020 publication of multimodal fNIRS data and code, with instructions on how to add synthetic responses for validating novel methods

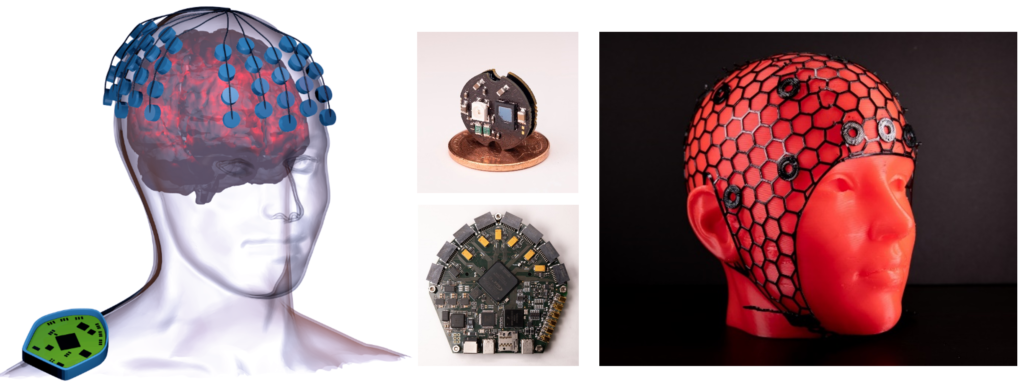

2019-20: The openfNIRS / ninjaNIRS – next generation high performance open source fNIRS instrumentation

After developing my first-generation openNIRS project and the multimodal M3BA (see below), I joined David Boas’ group at Boston University’s Neurophotonics Center and MGH, Harvard Medical School, to work on a next-generation open-source functional Near-Infrared Spectroscopy system, the ninjaNIRS. The system’s main architect is Bernhard Zimmermann. The ninjaNIRS is a fully scalable, fiberless, wearable fNIRS system with digital front-end optodes. I contributed to the electronics development of auxiliary hardware (optodes, wireless trigger functionality), to future architecture and strategy development, and to grant writing (see the NEW project above), and I led the development of the ninjaNIRS integration into a 3D-printed, fully customizable cap, the ninjaCap. The group is continuously driving the openfNIRS/ninjaNIRS project forward, and I have no doubt that the system will be greatly appreciated by the open science community.

Resources:
- openfNIRS project website
- OSA Optics and the Brain 2019 publication on the ninjaNIRS by B. Zimmermann et al.
- neuluce, a non-profit organization by David Boas

2015-19: M3BA – Wearable multimodal modular neuroimaging out of the lab

One of the centerpieces of my work within my PhD thesis at TU Berlin: concept, design, prototyping and validation of miniaturized wireless instrumentation for scalable and simultaneous acquisition of Electroencephalogram (EEG), functional Near-Infrared Spectroscopy (fNIRS), accelerometer, and other biosignals. The miniaturization and customization provide the instrumentation infrastructure for neuroimaging, monitoring and BCI outside of the laboratory and in the real world. The M3BA is fully stand-alone, can be integrated into any kind of headgear, has been used in multiple research collaborations across the world (including the USA, Australia, South Korea, India, …), and is currently being adopted for use in medical applications.

Resources:
- IEEE TBME 2016 main publication on the M3BA architecture and validation
- IEEE EMBC 2017 proceedings publication on hybrid EEG-fNIRS hardware design
- For more related M3BA publications and media, please see the PUBLICATIONS and TALKS+MEDIA pages for the years 2016-2020
- IEEE Featured Cover Article
- SPIE article 2019 on "hardwiring the brain"
- Patent (US/EU/CH/CA,...)
- M3BAS in PhD Thesis at Berlin Institute of Technology (TUB)

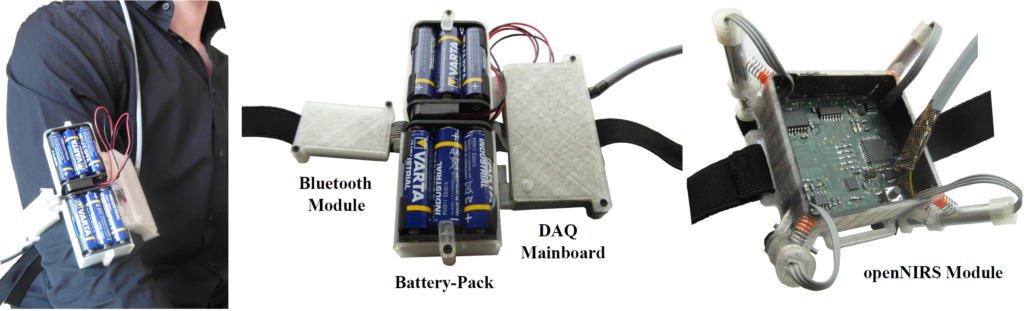

2014-15: The OpenNIRS project – Wearable, modular, open source functional Near-Infrared Spectroscopy

My first larger successful open-source project: design, prototyping, validation and documentation of miniaturized, stand-alone, open Near-Infrared Spectroscopy (NIRS) hardware. It resulted from my Master Thesis in Electrical Engineering at Karlsruhe Institute of Technology. While the designs are rather low-level and simple, and the performance is mediocre, the OpenNIRS project provides the resources to facilitate your own customized design of low-cost NIRS-based neuroimaging instrumentation without having to start from scratch.

Resources:
- the openNIRS project website
- frontiers 2015 publication of the openNIRS project
- Master Thesis at Karlsruhe Institute of Technology (KIT)
- openNIRS in PhD Thesis at Berlin Institute of Technology (TUB)

2011-13: Sensor Development for Robotic Hand Prostheses @ Vincent Systems Karlsruhe

Development and validation of EMG and prosthetic joint angle sensors as a part-time student employee.

Resources:
- Vincent Systems website

2011: Capacitive EMG sensors for an active orthosis

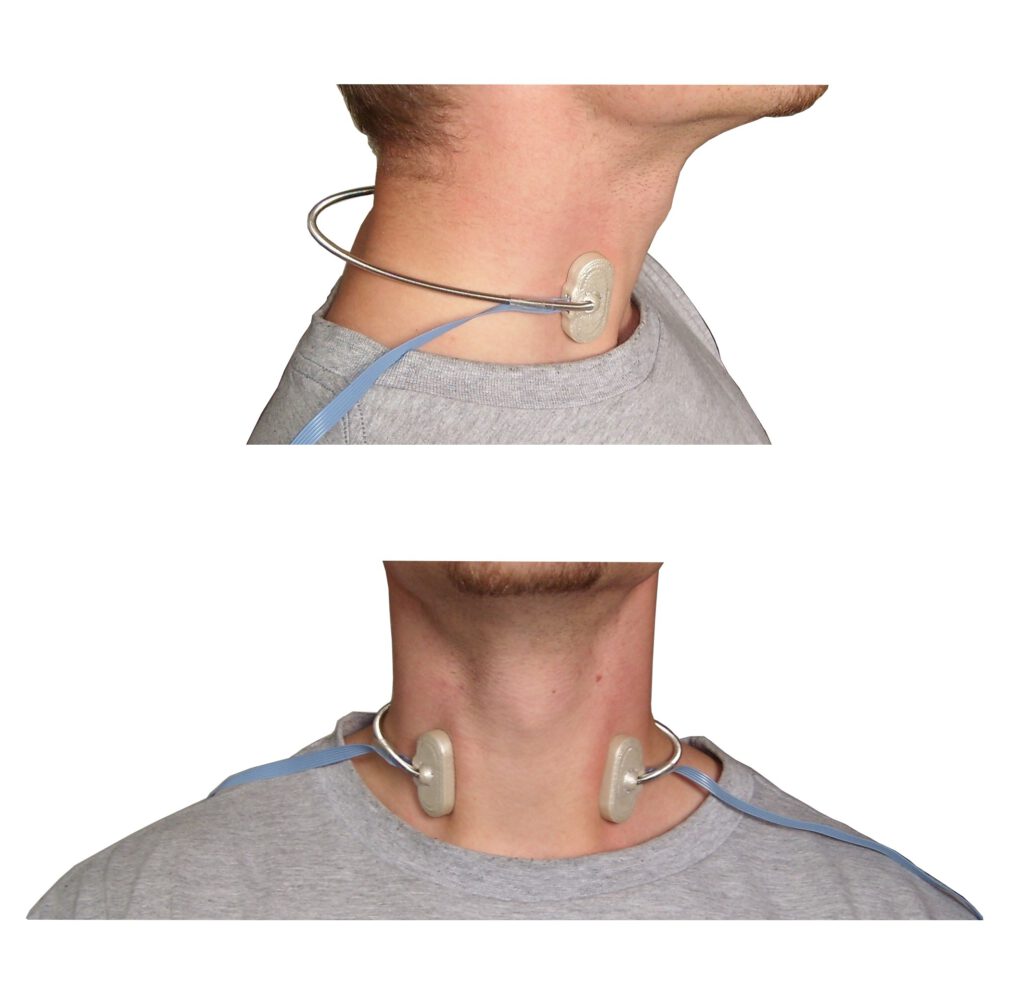

Development of capacitive active EMG electrodes to pick up muscle activity without galvanic contact – through cloth or plastic – to control an active orthosis using muscles of the neck. This was part of my Bachelor Thesis in Electrical Engineering at Karlsruhe Institute of Technology.

Resources:
- Bachelor Thesis at Karlsruhe Institute of Technology (KIT)
- Patent application (DE)
- Conference proceedings

2008: The early days. Building an EEG for Neurofeedback

My first neurotechnology project. During my civil service in 2007, I developed a low-cost two-channel bipolar EEG system with integrated audio and video feedback functionality to explore neurofeedback and my own brain. Resources from the openEEG project, repaired old computer parts, and self-made electrodes hammered from a silver coin enabled me to take my first steps in the world of EEG for under 300 EUR, and set me on my way into BCI and neurotechnology. The openEEG.org project also inspired my openNIRS.org project, which would follow six years later.

Resources:
- Jugend forscht project database
- publication in Young Researcher, 2009
- the openEEG project